A/B testing

A/B testing is one of the most essential practices in modern data-driven companies. Everyone needs it and talks about it, but there are many subtle and tricky details that not everyone understands. I will go through both the theoretical and the practical parts of A/B testing.

Statistical hypothesis testing

A statistical hypothesis test makes an assumption about the outcome, called the null hypothesis [1].

For example, suppose we run a test to improve the click-through rate (CTR) of user actions. Normally there are two groups, one for control and the other for test. The null hypothesis is that there is no change in user behavior: the user action distributions of the test and control groups are the same.

In contrast, the alternative hypothesis states that there is a difference between the two groups, which may be a CTR gain or a CTR loss. In short:

Null hypothesis (H0): there is no difference between the two groups – the A/B test failed

Alternative hypothesis (HA or H1): there is a difference between the two groups – the A/B test succeeded (assuming the result is positive)

Keep in mind that every test result is a draw from a statistical distribution, as illustrated below.

P-value

The p-value [2] is the core idea when we talk about the outcome of an A/B test. It is:

the probability of observing a result at least as extreme as the one observed, given that the null hypothesis is true.

how likely it is that your data could have occurred under the null hypothesis.

a proportion: if your p-value is 0.05, then 5% of the time you would see a test statistic at least as extreme as the one you found if the null hypothesis were true – i.e. how likely your data would be if the test group behaved exactly like the control group

Along with the p-value, there is a related concept called the significance level (alpha).

It is the threshold for declaring a finding statistically significant when interpreting the p-value:

p <= alpha: reject H0. The result is unlikely to come from the null hypothesis, so we conclude the two groups belong to different distributions – the result is significant

p > alpha: fail to reject H0. The data are consistent with both groups coming from the same distribution – the result isn't significant
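To make the p-value and the alpha decision concrete, here is a minimal sketch of one common way to test a CTR difference: a two-sided two-proportion z-test with a pooled proportion under H0. It assumes the metric is clicks / users and that both groups are large enough for the normal approximation; the function names and the click counts in the usage note are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(clicks_a, users_a, clicks_b, users_b):
    """Two-sided z-test for a CTR difference between control (a) and test (b).

    Under H0 both groups share one CTR, so the standard error uses the pooled proportion.
    Returns (z statistic, p-value).
    """
    p_a = clicks_a / users_a
    p_b = clicks_b / users_b
    pooled = (clicks_a + clicks_b) / (users_a + users_b)
    se = sqrt(pooled * (1 - pooled) * (1 / users_a + 1 / users_b))
    z = (p_b - p_a) / se
    # Probability of a statistic at least as extreme as z (either direction) if H0 is true
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


def decide(p_value, alpha=0.05):
    """Apply the significance-level rule: reject H0 when p <= alpha."""
    if p_value <= alpha:
        return "reject H0 (significant)"
    return "fail to reject H0 (not significant)"
```

For example, `two_proportion_z_test(1000, 10000, 1100, 10000)` (10% vs 11% CTR) gives z of about 2.3 and a p-value of about 0.02, so `decide` rejects H0 at alpha = 0.05.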

Two types of errors

A p-value is just a probability, so the conclusion can be wrong even when the result is significant [3].

Type I error: rejecting the null hypothesis when there is in fact no real effect (false positive). The p-value is optimistically small – the result is significant, but actually there is no effect

Type II error: failing to reject the null hypothesis when there is a real effect (false negative). The p-value is pessimistically large – the result isn't significant, but actually there is an effect
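Both error types can be observed directly by simulation. The sketch below (a hypothetical helper, assuming users click independently with a fixed CTR and the same pooled z-test as a standard two-proportion test) runs many simulated tests and counts how often they come out significant. With identical CTRs (an A/A test, so H0 is true) that fraction estimates the Type I error rate; with a real CTR difference it estimates power, and one minus it estimates the Type II error rate.

```python
import random
from math import sqrt
from statistics import NormalDist


def significant_rate(ctr_control, ctr_test, users=1000, n_tests=1000, alpha=0.05, seed=42):
    """Simulate many A/B tests and return the fraction that come out significant."""
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided critical value, about 1.96
    significant = 0
    for _ in range(n_tests):
        # Each user clicks with probability equal to their group's CTR
        clicks_a = sum(rng.random() < ctr_control for _ in range(users))
        clicks_b = sum(rng.random() < ctr_test for _ in range(users))
        pooled = (clicks_a + clicks_b) / (2 * users)
        se = sqrt(pooled * (1 - pooled) * 2 / users)
        z = (clicks_b - clicks_a) / users / se
        if abs(z) > z_crit:
            significant += 1
    return significant / n_tests


type_1 = significant_rate(0.10, 0.10)              # A/A test: should land near alpha = 0.05
power = significant_rate(0.10, 0.13, n_tests=300)  # hypothetical real lift from 10% to 13% CTR
type_2 = 1 - power                                 # fraction of real effects we miss
```

Every significant A/A result is a Type I error by construction, which is exactly the "5% of the time" in the p-value definition.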

Statistical power

The power of a hypothesis test is the probability that the test correctly rejects the null hypothesis when the alternative is true – how likely we are to find a significant result when the effect is real

The higher the statistical power of an experiment, the lower the probability of making a Type II (false negative) error.

Power = 1 - Type II error rate

The relationships are illustrated below.

On the left is the significance range for the null hypothesis

On the right is the power range for the alternative hypothesis

The errors happen where the two distributions overlap

The dark blue area represents the Type I error

The dark green area represents the Type II error: the effect is real (the result comes from H1), but it falls into the non-significant range of H0
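The shaded areas can be computed numerically. This sketch assumes the setup used in the next section's illustration (H0 centered at -2, H1 centered at 2), a standard deviation of 1 for both, and a one-sided test at alpha = 0.05 that rejects H0 when the result is large; the standard deviation and the one-sided choice are assumptions, since the figure itself is not reproduced here.

```python
from statistics import NormalDist


def error_areas(h0_center=-2.0, h1_center=2.0, sd=1.0, alpha=0.05):
    """Return (threshold, Type I area, Type II area, power) for a one-sided test."""
    h0 = NormalDist(h0_center, sd)
    h1 = NormalDist(h1_center, sd)
    threshold = h0.inv_cdf(1 - alpha)  # results above this are "significant"
    type_1 = 1 - h0.cdf(threshold)     # blue area: H0 is true but we reject it
    type_2 = h1.cdf(threshold)         # green area: H1 is true but we fail to reject H0
    return threshold, type_1, type_2, 1 - type_2


threshold, type_1, type_2, power = error_areas()
print(f"threshold={threshold:.3f}, Type I={type_1:.3f}, Type II={type_2:.4f}, power={power:.4f}")
```

Note that the Type I area is fixed by alpha alone, while the Type II area (and therefore power) depends on how far apart the two distributions are and how wide they are.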

Power Analysis

After going through the basic concepts, let's learn the fundamental process of A/B testing analysis.

Power analysis involves estimating one of the following four parameters, given the values of the other three:

Effect Size

The quantified magnitude of a result present in the population. Effect size is calculated using a specific statistical measure, such as Pearson’s correlation coefficient for the relationship between variables or Cohen’s d for the difference between groups – How much gain we expect

Sample Size

The number of observations in the sample – How many user samples we need

Significance

The significance level (alpha) used in the statistical test, often set to 5% or 0.05 – Threshold for success

Statistical Power

The probability of correctly rejecting the null hypothesis when the alternative hypothesis is true – Ability to find success

The most common use of power analysis is estimating the minimum sample size required for an experiment. There are many tools online for this, like this sample size calculator.
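As a rough sketch of what such calculators compute, here is one textbook formula for the sample size per group of a two-sided two-proportion z-test (normal approximation, unpooled variance). The function name and the 10% to 11% CTR example are hypothetical, and online calculators may use slightly different variants of the formula, so their numbers can differ a little.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_group(p_control, p_test, alpha=0.05, power=0.80):
    """Users needed in each group to detect p_control -> p_test with the given alpha and power."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # significance: two-sided critical value
    z_beta = NormalDist().inv_cdf(power)           # statistical power
    variance = p_control * (1 - p_control) + p_test * (1 - p_test)
    effect = p_test - p_control                    # effect size as an absolute CTR difference
    return ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


print(sample_size_per_group(0.10, 0.11))  # roughly 15k users per group
```

All four power-analysis parameters appear here: fix any three (effect, alpha, power) and the formula gives the fourth (sample size).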

Understand the relationship

To get a deep understanding of the four parameters, we need to dive further into how they relate to each other.

Sample size vs power

In fact, our observed result is a sample statistic, so it follows a sampling distribution around the true value.

This sampling distribution has a useful property: the larger the sample size, the narrower its shape [4] – which means higher power.

Suppose we have these 2 distributions

For H0, the center is -2

For H1, the center is 2

After we increase the sample size, both distributions become narrower and taller

The dark blue area stays the same (it is fixed by alpha), and the centers also stay the same

So the power increases
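The same effect can be checked numerically with the two distributions above (H0 at -2, H1 at 2). This sketch assumes a one-sided test at alpha = 0.05 and a baseline standard deviation of 1, and models "multiplying the sample size by k" as shrinking the standard deviation by a factor of sqrt(k), which is how the standard error of a mean scales.

```python
from math import sqrt
from statistics import NormalDist


def power_one_sided(h0_center, h1_center, sd, alpha=0.05):
    """Power of a one-sided test that rejects H0 above H0's (1 - alpha) quantile."""
    threshold = NormalDist(h0_center, sd).inv_cdf(1 - alpha)  # blue area right of it stays alpha
    return 1 - NormalDist(h1_center, sd).cdf(threshold)


# Multiplying the sample size by k shrinks the standard error by a factor of sqrt(k).
for k in (0.1, 0.25, 1, 4):
    sd = 1 / sqrt(k)
    print(f"sample size x{k}: sd={sd:.2f}, power={power_one_sided(-2.0, 2.0, sd):.3f}")
```

Power rises from roughly 0.35 at a tenth of the sample size to essentially 1 at four times the sample size, while the Type I area stays at alpha throughout.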

Effect size vs power

The larger the effect size, the farther apart the two distributions are – higher power

In the picture, we move the center of H1 from 2 to 4.

Alpha vs power

The higher the alpha, the higher the power – but also the higher the Type I error

We can increase alpha, but that also increases the probability of making a Type I error

The dark blue area grows

There is no free lunch
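Effect size and alpha can be explored the same way. This sketch reuses the H0-at-minus-2 setup and a one-sided test, but assumes a wider standard deviation of 2 (i.e. a smaller sample) so that the changes in power are visible; the numbers are illustrative, not from the original figures.

```python
from statistics import NormalDist


def power_one_sided(h0_center, h1_center, sd, alpha):
    """Power of a one-sided test that rejects H0 above H0's (1 - alpha) quantile."""
    threshold = NormalDist(h0_center, sd).inv_cdf(1 - alpha)
    return 1 - NormalDist(h1_center, sd).cdf(threshold)


SD = 2.0  # assumed: a wide (small-sample) distribution so the differences are visible

# Effect size: move the center of H1 from 2 to 4
for h1_center in (2.0, 4.0):
    print(f"H1 center={h1_center}: power={power_one_sided(-2.0, h1_center, SD, 0.05):.3f}")

# Alpha: a larger alpha buys power at the cost of more Type I errors (Type I error = alpha)
for alpha in (0.01, 0.05, 0.10):
    print(f"alpha={alpha}: Type I error={alpha}, power={power_one_sided(-2.0, 2.0, SD, alpha):.3f}")
```

The alpha loop is the "no free lunch" trade-off in numbers: every gain in power comes with a proportionally larger dark blue area.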

Subtle details

We have already covered the core concepts of power analysis. Now let's look at some subtle and tricky details that few people notice.

Low sample size and low power

When the sample size is small (and therefore power is low) but the result is significant, the result is more likely to be a false positive, and even if the effect is real, its estimated size can be highly exaggerated.

This is called the winner's curse [5].

It happens frequently in low sample size tests, such as new user tests.

As illustrated below

The center of H0 is -2, but in the low sample size test we still see a significant result. To be significant, the result has to be > 1 and fall in the dark blue area

With a larger sample size, even a significant result will be < 1, i.e. much less exaggerated

According to this doc [6], the actual exaggeration ratio can be very large.
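A small simulation shows the winner's curse. This is a sketch under simple assumptions: each test's observed lift is drawn from a normal distribution centered on a hypothetical true lift of 1.0, a smaller sample means a larger standard error, and we average the size of only those estimates that pass a two-sided test at alpha = 0.05.

```python
import random
from statistics import NormalDist, mean


def exaggeration_ratio(true_lift, se, alpha=0.05, n_sims=20000, seed=7):
    """Average size of the *significant* estimates divided by the true lift.

    A ratio above 1 means significant results overstate the effect (winner's curse).
    """
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    significant = []
    for _ in range(n_sims):
        estimate = rng.gauss(true_lift, se)  # observed lift in one simulated test
        if abs(estimate) / se > z_crit:       # only "winners" get reported
            significant.append(abs(estimate))
    return mean(significant) / true_lift


low_power = exaggeration_ratio(true_lift=1.0, se=1.0)    # small sample: power is about 17%
high_power = exaggeration_ratio(true_lift=1.0, se=0.25)  # 16x the sample: power is about 98%
print(f"low power: {low_power:.2f}x, high power: {high_power:.2f}x")
```

In the low-power case, significant results overstate the true lift by roughly 2.5x, while in the high-power case they are close to the truth.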

False positive risk

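If we declare a result “significant” based on the p-value of a single unbiased experiment, it can still be a false positive.

The False Positive Risk (FPR) [7] is the probability that a significant result is in fact a false positive. It depends on the prior probability that your idea will be successful.

You may wonder: what's the difference between FPR and Type I error?

The Type I error rate (alpha) is the probability of a significant result given that there is no real effect. The FPR goes the other way: among all tests with significant results, it is the fraction that are false positives. It is a summary number over all positive tests, not a property of a single test.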

Let's see a concrete example

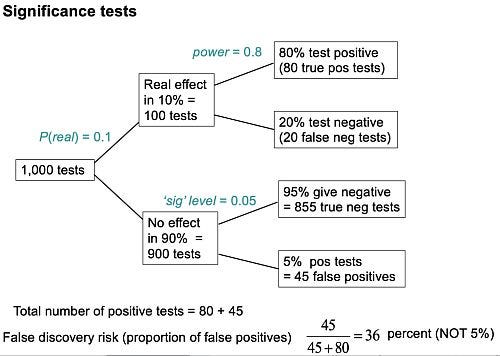

Suppose we have run 1,000 tests

10% of the ideas truly work (100 tests) and 90% do not (900 tests)

Among the 900 real negative tests, with a significance level of 5%, there will be 900 × 5% = 45 false positive results

Among the 100 real positive tests, with 80% power, there will be 100 × 80% = 80 true positive results

The false positive risk is therefore 45 / (45 + 80) = 36%, not our significance level of 5%

This result may look surprising at first, but it makes sense.
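The arithmetic of the 1,000-test example generalizes to a one-line formula. A minimal sketch (the function name is hypothetical), taking the prior success rate, alpha and power as inputs:

```python
def false_positive_risk(prior_true, alpha=0.05, power=0.80):
    """Fraction of significant results that are false positives."""
    false_positives = (1 - prior_true) * alpha  # real negatives that pass the threshold
    true_positives = prior_true * power         # real positives that are detected
    return false_positives / (false_positives + true_positives)


print(round(false_positive_risk(0.10), 2))  # 0.36, matching 45 / (45 + 80)
```

The lower the prior success rate of your ideas, the larger the share of "wins" that are false positives, even though alpha never changes.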

There are more concrete numbers from the industry [8].

That's why people propose raising the bar for the p-value:

Benjamin et al. (2017), in “Redefine statistical significance”, propose changing the threshold to 0.005 for “claims of new discoveries.”

One way to achieve a lower p-value is to run a replication. A simple approximation for combining two p-values is p1*p2*2 (so 0.05*0.05*2=0.005).

FAQ

The last part is a quick Q&A session.

The right way to conduct an A/B test

Planning

Create an A/B testing plan, including the hypothesis and approach

Select success metrics, like CTR/CVR/time spent

Calculate the sample size based on the primary metric

Have the relevant data scientists, PMs, or engineers review the plan

Active

Allocate users for a fixed period, which depends on your users' behavior cycles. In news, it can be 1 week

Wait until the test period is finished, unless there is a significant negative impact

Review

Run a post-test analysis and report the impact on metrics

Send the result to stakeholders and make a decision

Release

Announce the decision and release the traffic to all users

Why no one calculates sample size

Have you noticed that people rarely calculate sample size in A/B tests?

People don't know how to do it

They have already converted sample size into a test period based on experience. After the test period, the sample size should be large enough:

1 week for certain tests

2 weeks for other tests

How to know the effect size beforehand

If we have experience from similar tests, just follow it

If we have no experience, make a guess 😆. We can also refer to open data from the industry.

Pre-bias

Pre-bias (a difference between the groups that already exists before the test starts) is very likely in any test, especially with small traffic

We can remove the pre-bias from the final result using correction methods
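The text does not name a specific correction method; one widely used option is CUPED, which uses each user's pre-experiment value of the metric as a covariate. The sketch below is a simplified version under that assumption, with hypothetical data in which the test group was already more active before the test and there is no real treatment effect.

```python
from statistics import mean


def cuped_adjust(metric, pre_metric):
    """Return metric values with the part explained by pre-period behavior removed.

    metric[i] and pre_metric[i] belong to the same user. Pass control and test users
    together so that theta and the pre-period mean are shared by both groups.
    """
    mean_pre = mean(pre_metric)
    mean_post = mean(metric)
    cov = sum((x - mean_pre) * (y - mean_post) for x, y in zip(pre_metric, metric))
    var = sum((x - mean_pre) ** 2 for x in pre_metric)
    theta = cov / var  # regression slope of the metric on its pre-period value
    return [y - theta * (x - mean_pre) for x, y in zip(pre_metric, metric)]


# Hypothetical data: the test group was already more active before the test (pre-bias)
pre_control, pre_test = [1, 2, 3, 4, 5], [3, 4, 5, 6, 7]
post_control, post_test = [1, 2, 3, 4, 5], [3, 4, 5, 6, 7]  # no real treatment effect

adjusted = cuped_adjust(post_control + post_test, pre_control + pre_test)
adj_control, adj_test = adjusted[:5], adjusted[5:]
raw_diff = mean(post_test) - mean(post_control)  # 2: entirely pre-bias
adj_diff = mean(adj_test) - mean(adj_control)    # 0.0 after adjustment
```

Real data is noisier than this toy example, so the correction reduces pre-bias and variance rather than removing them exactly.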

Small sample size

The winner's curse happens all the time

Wait for a larger sample size, then check again

Re-test, or at least keep a holdback group

Require a lower (stricter) p-value threshold

Significant non-target metrics

What if our target metrics like CTR is neutral but non-target metrics like push open rate is significant?

If we only watch 1 metric, the false positive probability is 5%. If we watch 10 independent metrics, the probability that at least one of them is a false positive is 1 - 0.95^10 ≈ 40%

Most likely it's noise, and the sample size for open rate could be quite small

Do not put much weight on non-target metrics if there is no relevant hypothesis

A simple re-test can verify the result
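The 40% figure comes from treating each watched metric as an independent chance of a false positive. A minimal sketch of that calculation (the function name is hypothetical; correlated metrics would give a somewhat lower number):

```python
def prob_any_false_positive(n_metrics, alpha=0.05):
    """Chance that at least one of n independent, truly-null metrics comes out significant."""
    return 1 - (1 - alpha) ** n_metrics


for k in (1, 5, 10, 20):
    print(f"{k} metrics: {prob_any_false_positive(k):.0%}")
```

The more metrics we watch without a hypothesis, the more likely it is that one of them looks significant by chance alone.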

References

[1] https://machinelearningmastery.com/statistical-power-and-power-analysis-in-python/?utm_source=pocket_saves

[2] https://www.scribbr.com/statistics/p-value/

[3] https://zhuanlan.zhihu.com/p/495005605

[4] http://psychology.emory.edu/clinical/bliwise/Tutorials/CLT/CLT/fsummary.htm

[5] https://statisticaloddsandends.wordpress.com/2020/09/01/winners-curse-of-the-low-power-study/

[6] https://docs.google.com/document/d/1sRKParLv0UdOsdAJDTKxPRJstkEDP2Rg/edit

[7] http://www.dcscience.net/2020/10/18/why-p-values-cant-tell-you-what-you-need-to-know-and-what-to-do-about-it/comment-page-1/

[8] https://onedrive.live.com/view.aspx?resid=8612090E610871E4!415400&ithint=file,docx&authkey=!ACert2pUTlADS44